A couple of months ago, during Build 2021, Microsoft introduced Azure Applied AI Services (https://www.youtube.com/watch?v=45we8bNPsBc). Since then, I have received many questions about them and have had a chance to read about them and try some of them myself. That's one of the reasons I decided to write this blog post. Another reason is to record my first impressions, so I can come back to them later and check whether my perspective has changed.

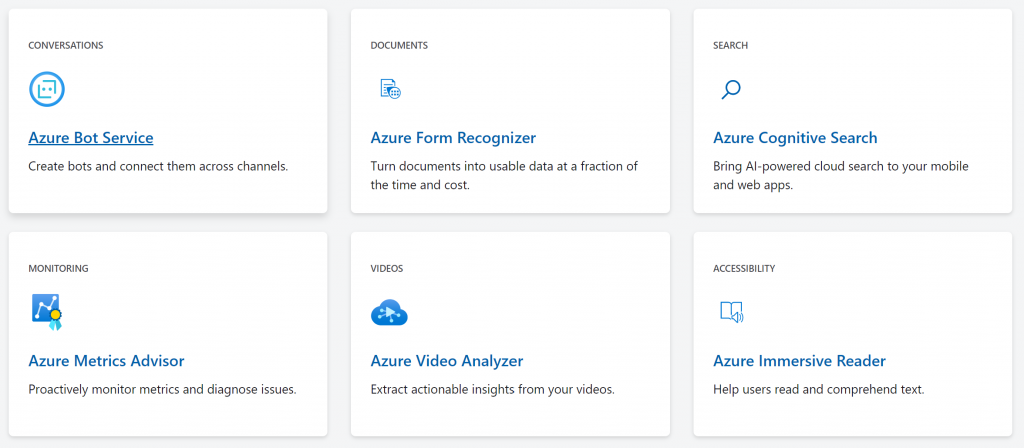

What are these Azure Applied AI services? They are sets of Cognitive Services combined to cover specific business scenarios. If you use one of the Applied AI services, you don't need to create several Cognitive Services resources in Azure and write extra code to make them work together for your specific task. There are currently six scenarios/Applied AI services: Bot Service, Form Recognizer, Cognitive Search, Metrics Advisor, Video Analyzer, and Immersive Reader. Almost all of them were once part of Cognitive Services in some form, and then they simply grew too big to stay in line with the others. I noticed that most of the services with their own UI (not just an Azure resource, but a dedicated portal) moved to the Applied AI group. Only Custom Vision and Language Understanding Intelligent Service (LUIS) remain under Cognitive Services, which makes sense because they are relatively small, independent, and cover specific tasks rather than a whole scenario.

Applied AI Services


For five of the six services mentioned above, you could recreate the functionality yourself using the available Cognitive Services and some custom code. The Bot Service is the only one that was never part of Cognitive Services; it was represented mainly by different combinations of the Web App Bot, LUIS, and QnA Maker Azure resources. That service also doesn't have a separate portal; instead, it has a whole desktop app you can install on Windows, Mac, and Linux: Bot Framework Composer (https://docs.microsoft.com/en-us/composer/). An Azure Bot resource can now also be opened in Composer automatically.

I still remember the large Search group in the Cognitive Services suite; it contained all kinds of search: news, image, text, etc. Now Cognitive Search from the Applied AI suite can perform all those searches plus autocomplete, geospatial search, OCR, and entity recognition. That makes it far less confusing and more convenient to use for all-purpose search.
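To make the autocomplete and geospatial capabilities a little more concrete, here is a minimal Python sketch that only builds Azure Cognitive Search REST query URLs (it does not send them). It assumes the `2020-06-30` REST API version, a hypothetical service named `my-search-service`, and a hypothetical `hotels` index that has a suggester called `sg` and an `Edm.GeographyPoint` field called `location`. All of these names are placeholders, and a real request would also need an `api-key` header.

```python
from urllib.parse import urlencode

# Assumed GA REST API version; check the current documentation before relying on it.
API_VERSION = "2020-06-30"


def index_docs_url(service: str, index: str) -> str:
    """Base URL of the documents collection for a (hypothetical) index."""
    return f"https://{service}.search.windows.net/indexes/{index}/docs"


def autocomplete_url(service: str, index: str, partial: str, suggester: str) -> str:
    """GET URL asking the service to complete a partially typed term."""
    params = {"search": partial, "suggesterName": suggester, "api-version": API_VERSION}
    return f"{index_docs_url(service, index)}/autocomplete?{urlencode(params)}"


def geo_search_url(service: str, index: str, text: str,
                   lon: float, lat: float, radius_km: float) -> str:
    """GET URL for a full-text search limited to documents within radius_km of a point."""
    # 'location' is an assumed field name. OData geo.distance returns kilometres,
    # and POINT takes longitude first, then latitude.
    geo_filter = (f"geo.distance(location, geography'POINT({lon} {lat})') "
                  f"le {radius_km}")
    params = {"search": text, "$filter": geo_filter, "api-version": API_VERSION}
    return f"{index_docs_url(service, index)}?{urlencode(params)}"


if __name__ == "__main__":
    print(autocomplete_url("my-search-service", "hotels", "sea", "sg"))
    print(geo_search_url("my-search-service", "hotels", "pool", -122.13, 47.68, 10))
```

Keeping URL construction separate from the HTTP call makes it easy to inspect exactly which query parameters the service receives before wiring it into an app.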

The Video Analyzer service grew out of a combination of the Computer Vision and Video Indexer Cognitive Services, containers, and other Azure resources. This Applied AI service is similar to Video Indexer but has more capabilities. I found an article, written a couple of years ago, that describes a custom solution for video analysis built on the Computer Vision service: https://www.cloudiqtech.com/real-time-video-analysis-using-microsoft-cognitive-services/. Now you only need to connect your IoT-enabled device to the Video Analyzer service, which is much more convenient.

Another former Cognitive Service and now Applied AI service is Azure Form Recognizer. Form Recognizer used to be relatively straightforward: you trained your model using the console and retrieved data from forms. The primary way to interact with the service was through its REST API. The new Form Recognizer has a whole portal built on top of that API: https://fott-2-1.azurewebsites.net/prebuilts-analyze (the URL is from the official documentation). Using the portal, you can:

- use a prebuilt model to work with images of forms: invoices, receipts, business cards, or IDs

- use the Layout functionality to extract text, tables, and structural elements from a document

- use the Custom functionality to train a model on your own forms and retrieve key-value pairs from your business-specific documents

It can be a helpful service for financial and HR tasks, such as scanning and analyzing receipts and legal documents, but it can also be used for any other task that involves scanned or photographed forms.
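Because the portal sits on top of the REST API, the same three capabilities can also be called directly. Below is a minimal Python sketch that assumes the Form Recognizer v2.1 REST API (the version the `fott-2-1` portal appears to target): it builds the analyze URL for a prebuilt model, Layout, or a custom model, submits a document by URL, and polls the `Operation-Location` header until the asynchronous job finishes. The endpoint, key, model ID, and document URL are placeholders, and error handling is deliberately minimal.

```python
import json
import time
import urllib.request
from typing import Optional

# Prebuilt model names as they appear in v2.1 REST paths (assumed; see the API reference).
PREBUILT = {"invoice", "receipt", "businessCard", "idDocument"}


def analyze_url(endpoint: str, kind: str, model_id: Optional[str] = None) -> str:
    """Return the v2.1 analyze URL for a prebuilt model, 'layout', or 'custom'."""
    base = endpoint.rstrip("/") + "/formrecognizer/v2.1"
    if kind in PREBUILT:
        return f"{base}/prebuilt/{kind}/analyze"
    if kind == "layout":
        return f"{base}/layout/analyze"
    if kind == "custom":
        if not model_id:
            raise ValueError("custom analysis needs the ID of your trained model")
        return f"{base}/custom/models/{model_id}/analyze"
    raise ValueError(f"unknown analysis kind: {kind}")


def analyze_document(url: str, key: str, document_url: str,
                     poll_seconds: float = 2.0) -> dict:
    """Submit a publicly reachable document and wait for the analysis result."""
    request = urllib.request.Request(
        url,
        data=json.dumps({"source": document_url}).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # The service replies 202 Accepted and points to the result via this header.
        result_url = response.headers["Operation-Location"]

    while True:
        poll = urllib.request.Request(result_url, headers={"Ocp-Apim-Subscription-Key": key})
        with urllib.request.urlopen(poll) as response:
            body = json.load(response)
        # Status moves from notStarted/running to succeeded or failed.
        if body.get("status") in ("succeeded", "failed"):
            return body
        time.sleep(poll_seconds)
```

For example, `analyze_document(analyze_url("https://<your-resource>.cognitiveservices.azure.com", "receipt"), "<your-key>", "https://example.com/receipt.jpg")` would analyze a receipt image with the prebuilt model; swapping `"receipt"` for `"layout"` or `"custom"` (plus a model ID) covers the other two options listed above.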

Immersive Reader was also introduced and became generally available under the Cognitive Services umbrella. Unfortunately, I never had a chance to work with it, but I saw the demo portal/website, where the service was used to read text out loud and help users understand some language nuances. Last year, Microsoft published a list of companies using the Immersive Reader service, which is interesting to know for reference: https://azure.microsoft.com/en-us/blog/empowering-remote-learning-with-azure-cognitive-services/. Immersive Reader is great in solutions for teaching kids, helping people with dyslexia (and similar disorders), and learning languages. There's no portal for this service, but you can always access Immersive Reader through its REST API and SDK. If you want to see how it works, you can try it here: https://azure.microsoft.com/en-gb/services/immersive-reader/#overview (about halfway down the page). I don't see it widely used right now, but I can see how useful it might be for language-focused scenarios.

The last Applied AI service is Azure Metrics Advisor. And, as you probably guessed, it used to be a Cognitive Service too. Seth Juarez's initial introduction of Metrics Advisor can be found here: https://channel9.msdn.com/Shows/AI-Show/Introducing-Metrics-Advisor. The service became far too big compared to the other Decision services and now consists of several parts. It also has its own portal, where you can connect it to your Azure resource and data sources, view graphs and analytics, perform root cause analysis of anomalies, analyze multi-dimensional data from multiple data sources, and even add hooks for notifications or for triggering automated processes. To use the portal, you need to create the Azure resource first and then connect it here: https://metricsadvisor.azurewebsites.net/. I think this service can be helpful not only for developers integrating it into their solutions; it can also serve as a low-code solution for monitoring anomalies and analyzing data. I think IoT solutions will be an ideal client for this service.

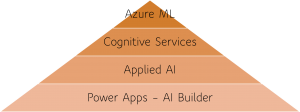

That was a quick overview of the new Applied AI group of tools from Microsoft. In the hierarchy of Microsoft AI solutions, the Applied AI group sits between the Power Platform low-code/no-code options and Cognitive Services. Here's a visual for that:

Applied AI in the hierarchy of the Microsoft AI tools

Tools from these categories can be used individually or mixed with tools from other groups. A mix-and-match approach is always a good idea for larger, more complex solutions and for mixed teams, where not everyone can or wants to write code.

Overall, I'm thrilled with the way Microsoft is making AI accessible to everyone: developers, managers, data scientists, etc. I also see that moving services around and renaming them can cause a lot of confusion. It makes sense to separate scenario-focused tools from task-focused ones. However, I hope Cognitive Services won't migrate entirely, and we will all still have access to more lightweight options. As always: the more options we have, the better.